My PhD student Qichao Lan is at SMC in Malaga this week, presenting the paper:

Lan, Qichao, Jim Tørresen, and Alexander Refsum Jensenius. “RaveForce: A Deep Reinforcement Learning Environment for Music Generation.” Proceedings of the Sound and Music Computing Conference. Malaga, 2019.

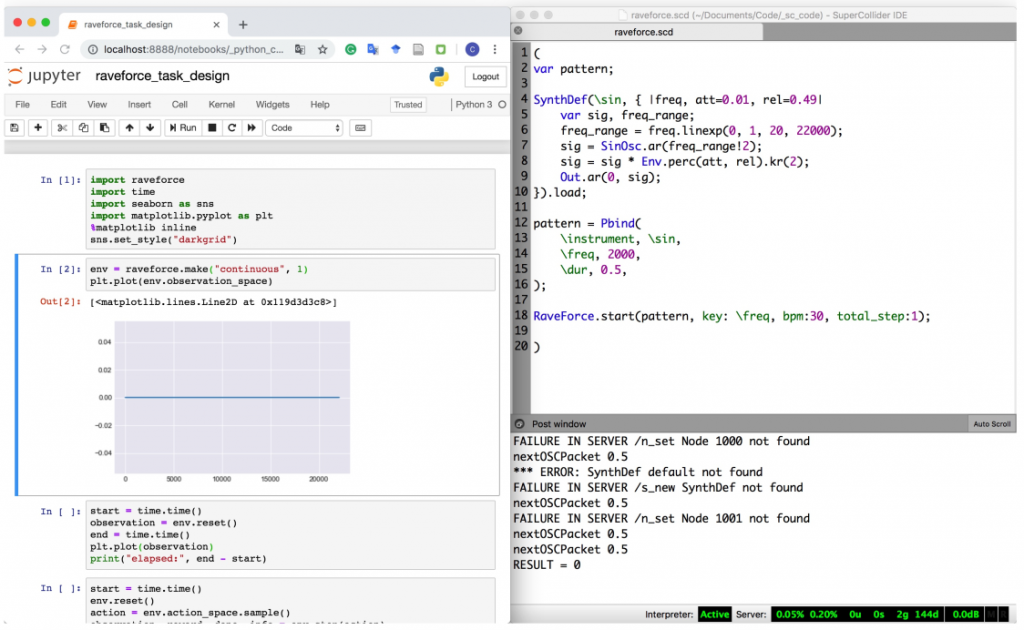

The framework that Qichao has developed runs nicely with a bridge between Jupyter Notebook and SuperCollider. This opens up lots of interesting experiments in the years to come.

Abstract:

RaveForce is a programming framework designed for a computational music generation method that involves audio sample level evaluation in symbolic music representation generation. It comprises a Python module and a SuperCollider quark. When connected with deep learning frameworks in Python, RaveForce can send the symbolic music representation generated by the neural network as Open Sound Control messages to the SuperCollider for non-realtime synthesis. SuperCollider can convert the symbolic representation into an audio file which will be sent back to the Python as the input of the neural network. With this iterative training, the neural network can be improved with deep reinforcement learning algorithms, taking the quantitative evaluation of the audio file as the reward. In this paper, we find that the proposed method can be used to search new synthesis parameters for a specific timbre of an electronic music note or loop.
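
To make the loop described in the abstract a bit more concrete, here is a toy sketch in plain Python. It is not RaveForce's API and does not talk to SuperCollider. The `render` function is a hypothetical stand-in for the synthesis step (a simple sine tone instead of a SuperCollider synth), `reward` is an assumed sample-level score (negative mean squared error against a target sound), and `search` uses basic hill climbing as a placeholder for a deep reinforcement learning agent. All names, parameter ranges, and constants are assumptions made for illustration.

```python
import math
import random

SAMPLE_RATE = 8000   # assumed low sample rate, just to keep the toy fast
DURATION = 0.05      # assumed length in seconds of each rendered sound


def render(freq, amp):
    """Stand-in for the synthesis step: turn parameters into audio samples."""
    n = int(SAMPLE_RATE * DURATION)
    return [amp * math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n)]


def reward(audio, target):
    """Sample-level evaluation: negative mean squared error against the target."""
    return -sum((a - t) ** 2 for a, t in zip(audio, target)) / len(target)


def search(target, steps=500, seed=0):
    """Hill climbing over (freq, amp); a placeholder for a deep RL agent."""
    rng = random.Random(seed)
    best = (rng.uniform(100.0, 1000.0), rng.uniform(0.1, 1.0))
    best_reward = reward(render(*best), target)
    for _ in range(steps):
        # Propose new parameters near the current best, clipped to the assumed ranges.
        freq = min(1000.0, max(100.0, best[0] + rng.gauss(0, 20)))
        amp = min(1.0, max(0.1, best[1] + rng.gauss(0, 0.05)))
        # Render the sound and score it at the sample level.
        r = reward(render(freq, amp), target)
        # Keep the proposal only if the reward improved.
        if r > best_reward:
            best, best_reward = (freq, amp), r
    return best, best_reward


# The "specific timbre" to search for: here just a 440 Hz sine at half amplitude.
target = render(440.0, 0.5)
params, final_reward = search(target)
print("parameters found:", params, "reward:", final_reward)
```

Hill climbing can easily get stuck far away from the target frequency, which hints at why a learning agent is the more interesting choice. In the real setup, the rendering would happen in SuperCollider and the parameters would travel there as Open Sound Control messages.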