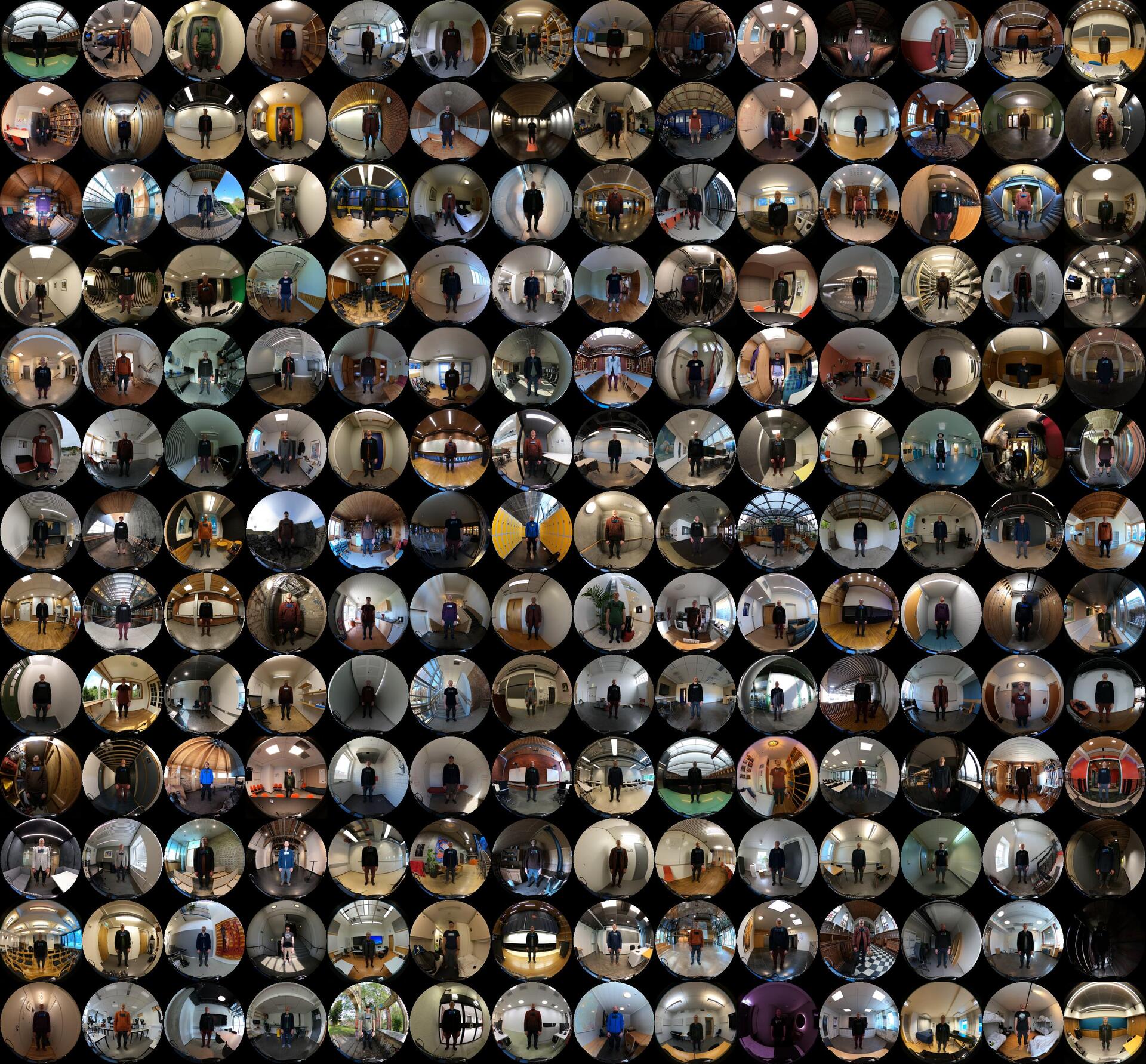

365 Days of Still Standing

Today is New Year’s Eve, and I have done my 365th standstill of the year. I began my year-long #StillStanding project on 1 January, and I am happy to report that I concluded it as planned! Some days were more challenging than others, but I am pleased that I made a recording every day. I wrote a blog post after the first 100 days and made a video of the first half year....