Professor of music technology and Director of RITMO Centre for Interdisciplinary Studies in Rhythm, Time and Motion, University of Oslo. I study music-related body motion in the fourMs Lab. My new book Sound Actions summarizes my experimentation with untraditional instruments. #StillStanding every day.

Recent Posts

Tips for conference presentations

The doctoral and postdoctoral research fellows sent a questionnaire to the PIs at RITMO the other day. The aim was to collect information about conference presentations. It was a great set of questions, so I am posting my answers here, a little more elaborated than in the questionnaire.

Being nervous Have you ever been nervous to present at a conference? How did you cope with this?

Yes, I have been nervous many times.

read more

A Year of Sonic Rhythms

Yesterday, I completed my year-long StillStanding project. This was my second year-long project. In 2022, I recorded one sound action daily. When I reached the end of 2022, I found it to be an efficient way of collecting data. My 2023 project confirmed this belief, so I am inspired to try another year-long experiment.

Focusing on AMBIENT I will spend the coming month completing the Still Standing manuscript. I am saying this loud now to put pressure on myself and help the world understand why I must focus on writing.

read more

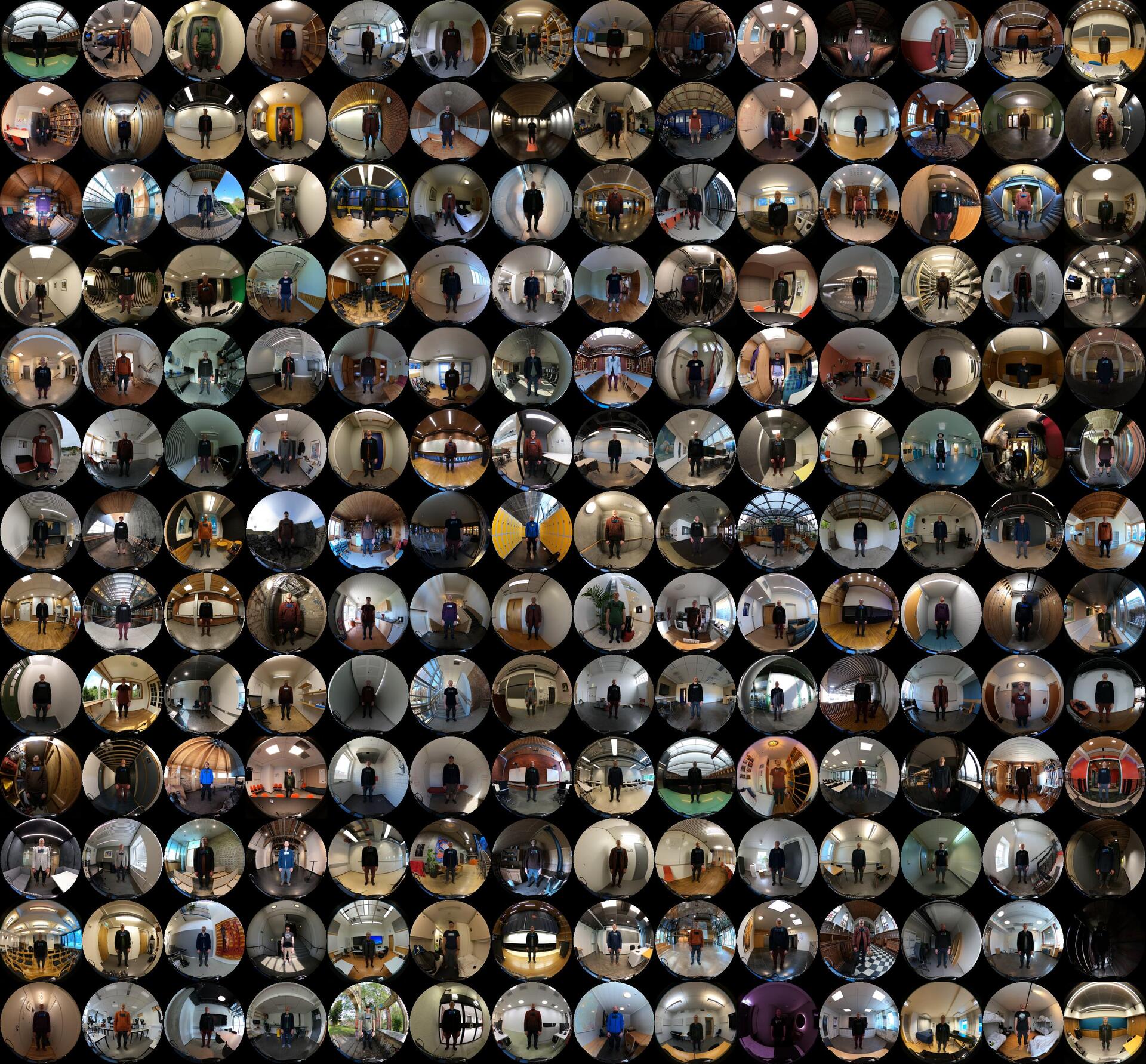

365 Days of Still Standing

Today is New Year’s Eve, and I have done my 365th standstill of the year. I began my year-long #StillStanding project on 1 January this year, and I am happy to report that I managed to conclude the project as planned! A few days were more challenging than others, but I am pleased I made recordings every day.

I wrote a blog post after the first 100 days and a video of the first half year.

read more

Using ChatGPT to shorten my bio

I continue exploring how ChatGPT can be used meaningfully in my daily life. Today, I have looked at how it can help me rewrite my bio.

Whenever I do some public speaking, I typically need to submit a bio for the event page. Often, the organizers ask about a particular number of words (60, 100, 200, etc.). This falls within the category of “publicazione con variazione”, and is mainly an annoying and time-consuming task.

read more

FAIR data as an enabler for research-led education

As part of my duties as a Norwegian member of EUA’s Open Science Expert Group, I was asked to write an “expert voice” on how to think about FAIR data from an educational perspective. Below is a copy of my short article.

How Findable, Accessible, Interoperable and Reusable data enables research-led education FAIR data is an essential component of the open research ecosystem. In this article, Alexander Refsum Jensenius argues that “FAIRification” can also benefit research-based and research-led education, providing opportunities to bring together different university missions.

read more

Which image format should I use?

Many image file formats exist, but which ones are better for what task? Here is a quick overview in my little series of PhD advice blog posts (the previous being tips on dissertation writing and the public PhD defense).

Two different image types When choosing a file format for your image, the first thing is to figure out whether you are dealing with a raster image (photos) or a vector image (line illustrations).

read more

Reflections on Open Innovation

I have been challenged to talk about innovation in the light of Open Research today. This blog post is a write-up of some ideas as I prepare my slides. Looking at my blog, I have only written about innovation once in the past, in connection to a presentation in Brussels about Open Innovation. Then, I highlighted how my fundamental music research led to developing a medical tool. That is an example of “classic” innovation, developing software that solves a problem.

read more

Tips for a public PhD defense

Yesterday, I gave some PhD dissertation advice. Today, I will present some tips for PhD candidates ready for public defense.

In Norway, the public defense is a formal event with colleagues, friends, and family present—we typically also stream them on YouTube. The good thing is that when you are ready for the defense, the dissertation has already been accepted. Now it is time to show lecturing skills in the trial lecture and the ability to engage with peers in the disputation.

read more

What should a PhD dissertation look like?

I am supervising several PhD fellows at the moment and have found that I repeat myself in the one-to-one meetings. So I will write blog posts summarizing general advice I give everyone. This post deals with what a PhD dissertation should look like.

The classic Ph.D. dissertation Dear PhD fellow (in Norway, PhD fellows are employees, not students): All dissertations are different, yours included. You can write it however you want as long as it is good!

read more

Making image parts transparent in Python

As part of my year-long StillStanding project, I post an average image of the spherical video recordings on Mastodon daily.

Average images The average image is similar to an “open shutter” technique in photography; it overlays all the frames in a video. The result is an image that shows the most prominent parts of the video recording. This is ideal for my StillStanding recordings, because the technique effectively “removes” objects that appear in the recording for a short period of time.

read more