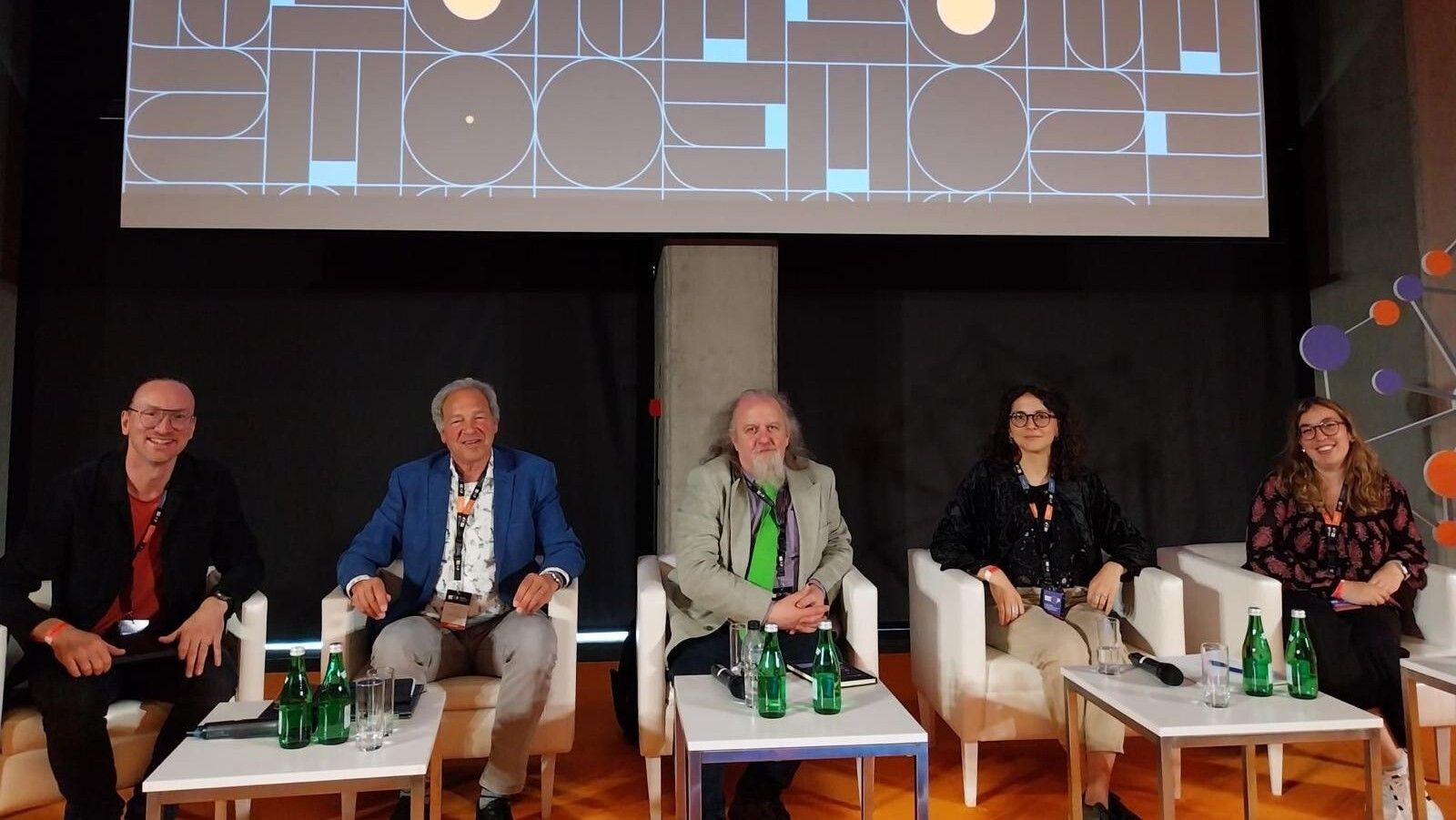

New research data careers

There is an increasing focus on making data openly available, or at least archived according to the FAIR principles. This has also led to the need for more knowledge and skills in data management at all levels. For some, this is becoming a career path on its own. That was the topic of the panel session “Fostering the emergence of new research data careers” I moderated at EuroScience Open Forum (ESOF2024) in Katowice, Poland, today....