One of my reasons for developing motiongrams was to have a way of visualising movement that would be compatible with spectrograms. That would make it possible to study how movement evolves over time in relation to how the audio changes over time.

In my current implementation of motiongrams in Max/MSP/Jitter (and partially in EyesWeb), there was no way to synchronise a motiongram with a spectrogram. The problem was that the built-in spectrogram in Max/MSP ran much faster than the motiongram, so the two were out of sync from the start. The same was true in EyesWeb.

The idea has always been to output motiongrams and spectrograms with the same time coding in a single display, and I am happy to announce that we solved the problem during a workshop at SMC today. The solution is to use the same counter to drive the drawing of both the spectrogram and the motiongram, and then to concatenate the two images. I have developed a small program in Max/MSP/Jitter that does this (which will hopefully make it into Jamoma before long), and Paolo Coletti has written a couple of EyesWeb blocks that will be included in the next release.
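To make the shared-counter idea concrete, here is a minimal sketch in plain Python. It is not the Max/MSP/Jitter patch or the EyesWeb blocks mentioned above, only an illustration under stated assumptions: video frames are grayscale images given as lists of rows, the audio is mono, and the hypothetical parameter `samples_per_frame` (audio sample rate divided by video frame rate) is an integer. Each motiongram column is computed here as the row-wise mean of the absolute difference between consecutive frames, and each spectrogram column as the magnitude of a naive Hann-windowed DFT. All function names are hypothetical. The key point is that a single loop counter, `tick`, produces one column of each image, so the two cannot drift apart.

```python
import cmath
import math


def motion_column(prev_frame, frame):
    """One motiongram column (hypothetical helper): the absolute difference
    between two grayscale frames, averaged across each row."""
    return [sum(abs(a - b) for a, b in zip(r0, r1)) / len(r1)
            for r0, r1 in zip(prev_frame, frame)]


def spectrum_column(block):
    """One spectrogram column (hypothetical helper): magnitudes of a naive
    O(N^2) DFT of a periodic-Hann-windowed audio block, bins 0..N/2."""
    n = len(block)
    windowed = [x * 0.5 * (1 - math.cos(2 * math.pi * i / n))
                for i, x in enumerate(block)]
    return [abs(sum(windowed[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(n // 2 + 1)]


def coupled_gram(frames, audio, samples_per_frame):
    """Draw spectrogram and motiongram from the same counter and concatenate
    them. Returns a list of columns (time runs along the outer list); each
    column holds the spectrum bins followed by the motion rows."""
    ticks = len(frames) - 1
    if len(audio) < ticks * samples_per_frame:
        raise ValueError("not enough audio for the given frames")
    image = []
    for tick in range(1, len(frames)):  # the shared counter
        motion = motion_column(frames[tick - 1], frames[tick])
        # Audio covering the interval between frame tick-1 and frame tick.
        start = (tick - 1) * samples_per_frame
        spectrum = spectrum_column(audio[start:start + samples_per_frame])
        image.append(spectrum + motion)  # stack the two halves of one column
    return image
```

Because both columns come from the same `tick`, the spectrogram advances exactly one audio block per video frame, which is the property that the separately clocked built-in objects lacked.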

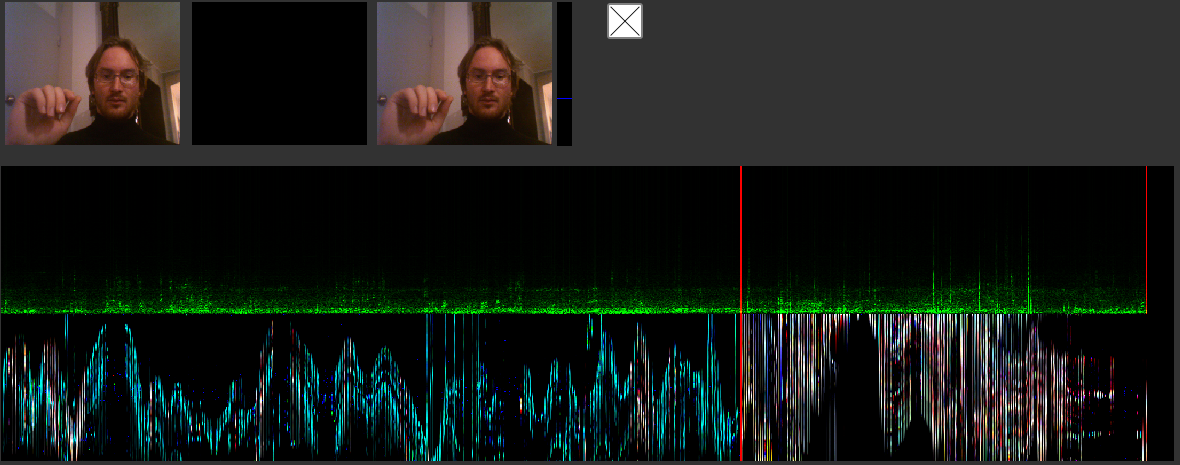

Below is a screenshot showing the coupled spectrogram and motiongram. I am not particularly happy with the colours in this implementation, so this is something we will need to experiment with further.
